It's a glorified autocomplete function.

That's all it is.

And yet people are somehow attributing intelligence, innovation, imagination, creativity, invention and consciousness to them.

Nope.

If anything, it proves how dumb the average human is. It's a bit like the Turing test: that's not really a test of whether the AI is intelligent, it's a test of how gullible/dumb the human testers are. It gets misused an awful lot to claim some new form of intelligence, but it doesn't actually measure that at all.

The irony is, it's not just a glorified autocomplete function, it's an extremely resource-heavy, poor, unpredictable and *easily compromised* one. Just a few sentences and you can "convince" it to go against all its explicit training to do things that it should never be doing.

And every time someone suggests we should use it, I point to data protection laws, to the fact that we have no idea what it's actually doing with any of the data we give it, and to the fact that almost all LLMs and modern AIs out there are trained on data of very dubious origin.

Some examples from various LLMs that I've used to demonstrate this include:

- Asked it about fire extinguishers. It literally got everything backwards and, for a particular type of fire, recommended a dangerous extinguisher more often than the correct one.

- Asked it about a character that doesn't exist in a well-known TV programme that does. It made up characters by merging similarly-named characters from other TV shows, and randomly attributed characteristics of some real characters to those "invented" ones, including actors' names, plot elements, etc. So you had actors who'd never appeared in the show "portraying" characters who didn't exist in the show, in plot lines that never happened in the show. No matter how much I probed it or changed the names, it asserted with utter confidence that almost every single name I gave it was a real character, in a TV show with only 4 main characters, and made up bios for each of them. It will confidently spew out a synopsis of every episode (so it "knows" what did and didn't happen), and then still insert its made-up characters into the mix once you ask about them, even though that's quite clearly just rewriting history and those characters never existed.

- An employee of mine was given a research project to source some new kit. They plugged it into ChatGPT (against my wishes). It returned a comprehensive, detailed list summarising 10 viable products that met the criteria. 5 literally did not exist. 3 were long-discontinued and came with false data. The remaining 2 were real, currently available products, but with unreliable specifications, and they were nowhere near ideal for the task. And all it needed to do was scrape a "10 best <products>" article and it would immediately have produced a far better shopping list.

Not to mention that it can't count, can't follow rules, can't infer anything, etc.

And it never takes much to generate examples like that. In fact, each time someone questions this, I think up a new test off the top of my head that I've never tried before, run it through an LLM, and get results like the above. It's fine if it gets a TV character wrong, since no harm can result. But if it can't even get that right, why would you ever trust it to do things like autocomplete code in a programming project? (Sorry, but any company that allows that is just opening itself up to copyright claims down the road, not to mention security and other issues.)

LLMs are glorified autocomplete, and if you're putting your primary customer interface, or your programming code repository, or your book publishing output into the hands of a glorified autocomplete, you deserve everything you get.

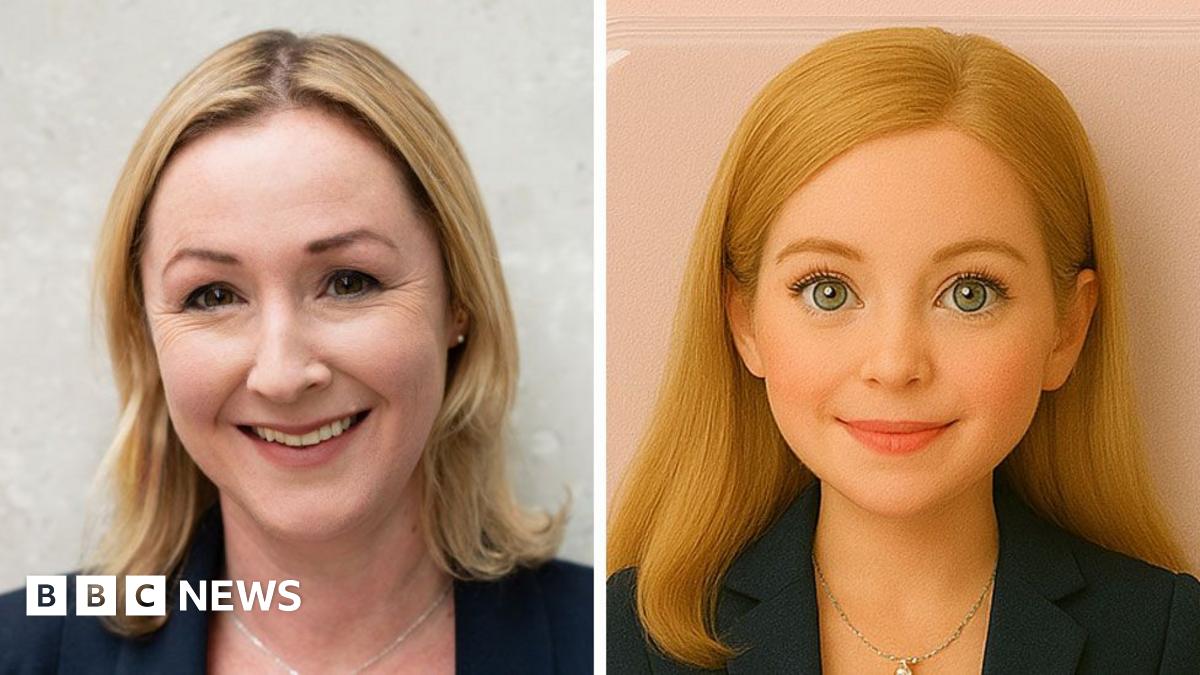

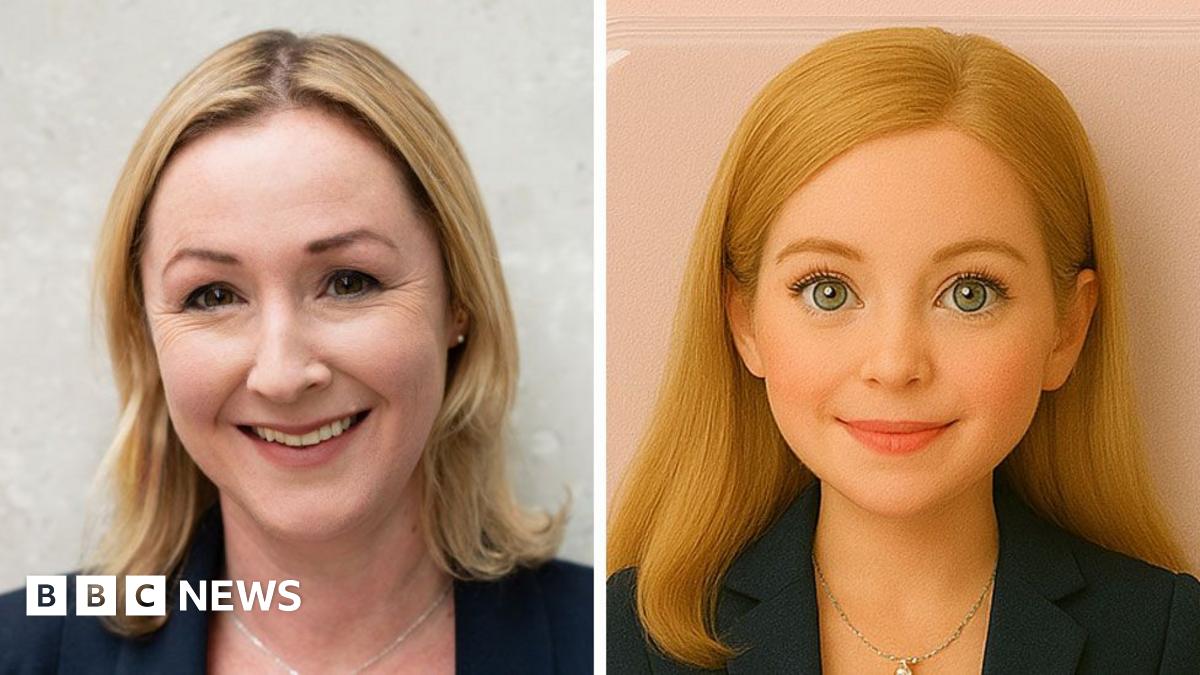

www.bbc.co.uk